At a recent Google I/O talk, a few key topics came up that I found useful and wanted to share. The videos are below.

The key thing is that Googlebot now uses the latest version of Chrome for rendering. It was previously on Chrome 41, which is over 3 years old. This is great news, as it allows developers to use more of the latest tools available without having to add polyfills and workarounds to make sure the site works for Googlebot.

Zoe Clifford from Google also confirmed that it will be evergreen, so when a new version of Chrome is released the bot will follow suit. Their plan is to follow a few weeks later (but they are making no promises). I can cope with a few weeks; it's much better than a few years.

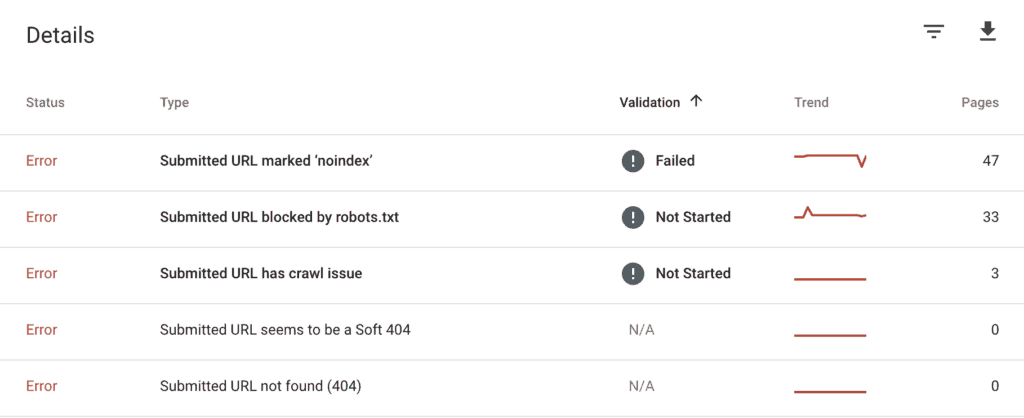

The two points I think are most useful to the majority of SEOs are what the error messages below mean in Search Console.

As well as using their answers, I've also tried to add in when I find them useful and how I try to fix the issues.

Discovered but Currently not indexed

When analysing Google Search Console you might have come across the warning “Discovered but currently not indexed” and wondered what it means.

It’s potentially because your crawl budget is too low for Google to index and evaluate these pages.

Crawl Budget is a rate limit applied by Google on how much of a site Google can crawl (we have covered this before and while Google seems to have an unlimited amount of cash reserves, it doesn’t have an unlimited amount of crawl budget).

Therefore each site is given a limit, and this can change over time: the more popular and useful your site is, the higher your budget.

Tom's new blog will start off with a very low budget. The BBC, however, is seen as a trustworthy news source and so will have a much higher budget, plus it's producing a large amount of content frequently.

Another reason Google gives for the limit is to be a good bot: it doesn't want to hit your server too much too soon and bring down your website. I have seen other bots which are far more active than Googlebot, Bingbot for example.
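If you want to check how active each bot is on your own site, a rough sketch like the one below counts hits per crawler in an access log. The log lines are made-up examples and `count_bot_hits` is a hypothetical helper, not part of any tool. It matches on the user agent string only, which can be spoofed, so treat the numbers as a first look rather than proof.

```python
from collections import Counter

# Lowercase tokens that appear in each crawler's user agent string.
BOTS = {"Googlebot": "googlebot", "Bingbot": "bingbot"}

def count_bot_hits(log_lines):
    """Count requests per known bot, matching on the user agent text only."""
    hits = Counter()
    for line in log_lines:
        lowered = line.lower()
        for name, token in BOTS.items():
            if token in lowered:
                hits[name] += 1
                break  # one bot per request
    return hits

# Made-up access log lines, for illustration only.
sample_log = [
    '192.0.2.1 - - [10/May/2019:10:00:01 +0000] "GET / HTTP/1.1" 200 5120 "-" '
    '"Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"',
    '192.0.2.2 - - [10/May/2019:10:00:02 +0000] "GET /blog HTTP/1.1" 200 4096 "-" '
    '"Mozilla/5.0 (compatible; bingbot/2.0; +http://www.bing.com/bingbot.htm)"',
    '192.0.2.2 - - [10/May/2019:10:00:03 +0000] "GET /about HTTP/1.1" 200 2048 "-" '
    '"Mozilla/5.0 (compatible; bingbot/2.0; +http://www.bing.com/bingbot.htm)"',
    '198.51.100.7 - - [10/May/2019:10:00:04 +0000] "GET / HTTP/1.1" 200 5120 "-" '
    '"Mozilla/5.0 (Windows NT 10.0; Win64; x64)"',
]

for bot, count in count_bot_hits(sample_log).items():
    print(f"{bot}: {count} request(s)")
```

Running the same count over a few days of real logs gives you a feel for how often each bot visits and whether that changes over time.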

Changes to make to improve Crawl Budget

There are many things you can do to reduce crawl wastage. Start with internal redirects: each one means two or more URLs Google must load before it gets to the final page. You control the site, so you can fix it by linking straight to the final URL.

Internal broken links are similar: why send Googlebot to a dead page? Either remove the link or point it to a resource which is live.
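As a rough sketch of how you might spot both problems, the snippet below walks a crawl export and flags internal links that redirect or end on a dead page. The `responses` data, the URLs and the helper names are all hypothetical, and treating an unknown URL as a 404 is my own assumption for the example.

```python
# Hypothetical crawl export: URL -> (status code, redirect target or None)
responses = {
    "/old-page": (301, "/old-page/"),
    "/old-page/": (301, "/new-page"),
    "/new-page": (200, None),
    "/about": (200, None),
    "/retired": (404, None),
}

def resolve(url, responses, max_hops=10):
    """Follow redirects from `url`; return (hops, final_url, final_status)."""
    hops = 0
    seen = {url}
    # Assumption: a URL missing from the export is treated as a 404.
    status, target = responses.get(url, (404, None))
    while target is not None and hops < max_hops:
        hops += 1
        url = target
        if url in seen:  # redirect loop, stop following
            break
        seen.add(url)
        status, target = responses.get(url, (404, None))
    return hops, url, status

def audit_links(links, responses):
    """Return internal links that waste crawl requests: redirected or dead."""
    issues = {}
    for link in links:
        hops, final_url, status = resolve(link, responses)
        if status >= 400:
            issues[link] = "broken: remove the link or point it at a live page"
        elif hops > 0:
            issues[link] = f"{hops} redirect(s): link straight to {final_url}"
    return issues

for link, problem in audit_links(["/old-page", "/about", "/retired"], responses).items():
    print(f"{link} -> {problem}")
```

Every hop and every dead end is a request Googlebot spends without reaching useful content, so the links flagged here are the ones to update first.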

Slow loading pages are another one. Crawl budget isn't a set number of pages and it does change frequently. Quick loading pages allow Google to crawl more pages, so a faster loading site does increase crawl budget.

Crawl Budget During Rendering

Resources requested during rendering do count towards your crawl budget, so keep this in mind when developing and optimising sites.

Things which are classed as requests include, but are not limited to, JavaScript files, XHR requests and fetch requests.

This wasn't something I was aware of, so it's useful information to know.

So limit the number of requests you make on every page. If you use the same resources across multiple pages, though, these are cached, so they wouldn't count towards your crawl budget.

Confusing, I know, so let's keep it simple:

- Crawl budget is important and some pages might not be rendered if you run out of crawl budget (if it’s not rendered it won’t appear in the SERPs)

- Requested resources count towards your crawl budget

- Google caches common assets on your site and therefore they don't count towards crawl budget.
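To make the points above concrete, here is a deliberately simplified model: every page and every resource it requests costs one fetch, and anything already fetched is cached and costs nothing more. The page and file names are hypothetical, and how Google's cache really behaves is not something the talk spelled out, so this only illustrates the idea.

```python
def count_fetches(pages):
    """Count fetches, assuming each unique URL is fetched once and then cached."""
    cached = set()
    fetches = 0
    for page_url, resources in pages.items():
        for url in [page_url, *resources]:
            if url not in cached:
                cached.add(url)
                fetches += 1
    return fetches

# Hypothetical site: page URL -> resources requested while rendering it
pages = {
    "/": ["/static/app.js", "/static/site.css", "/api/home.json"],
    "/products": ["/static/app.js", "/static/site.css", "/api/products.json"],
    "/contact": ["/static/app.js", "/static/site.css"],
}

without_cache = sum(1 + len(resources) for resources in pages.values())
with_cache = count_fetches(pages)
print(f"Without caching: {without_cache} fetches")  # 11
print(f"With caching: {with_cache} fetches")        # 7
```

In this model the shared JavaScript and CSS files are only paid for once, while the page-specific API calls are paid for on every page, which is why trimming per-page requests matters most.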

So with “Discovered but currently not indexed”, Google is aware of these pages but hasn't crawled them yet.

Soft 404 Errors in Google Search Console

A soft 404 is basically a page where a 200 response status was returned but the content on the page looks like a 404, hence the “soft” part.

Google thinks this page is a 404 page, but you return a 200 response.

I've found this report useful in the past when analysing websites. To fix it, I usually update the content on the page. It happens a lot on ecommerce websites, especially on category pages. These pages are super important and are usually high traffic pages, so it's well worth watching out for these errors and fixing them when you see them.
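If you want to find likely soft 404s yourself before Google reports them, a minimal heuristic might look like the sketch below. The phrase list and the word count threshold are my own assumptions, not anything Google has published, so tune both for your site.

```python
import re

# Assumed phrases that suggest an error or empty page; adjust for your own templates.
NOT_FOUND_PHRASES = (
    "page not found",
    "no longer available",
    "no products found",
)

def looks_like_soft_404(status_code, html, min_words=50):
    """Flag pages that return 200 but read like an error or an empty page."""
    if status_code != 200:
        return False  # a real 404 or 410 is not a soft 404
    text = re.sub(r"<[^>]+>", " ", html).lower()  # crude tag stripping
    if any(phrase in text for phrase in NOT_FOUND_PHRASES):
        return True
    # Very thin pages, such as an empty category, are also suspicious.
    return len(text.split()) < min_words

empty_category = "<h1>Shoes</h1><p>No products found.</p>"
print(looks_like_soft_404(200, empty_category))  # True
print(looks_like_soft_404(404, empty_category))  # False
```

An empty ecommerce category page is the classic case: the template loads fine and returns a 200, but there is nothing on the page for Google to index.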

This was discussed at the recent Google I/O and you can see the clips below. There isn't a lot of new information in there, apart from Googlebot using the latest version of Chrome and being evergreen going forward.

Discovered but Currently not indexed Video:

Soft 404 Errors in Google Search Console:

It's worth watching the keynote, as it's full of very useful information.

One thing I want to make clear is that crawl budget won't really affect your 50-page affiliate site. Google should crawl every page pretty frequently (worth checking), but it becomes more of an issue the bigger your site becomes.

The majority of the crawl information was covered in this Google article on Googlebot.

If you have any specific questions on Server Log Analysis or Googlebot, please ask in our Facebook Group.